While Apache Hadoop is a framework that lets us store and process big data in a distributed environment, Apache Spark is only a data processing engine, developed to provide faster and easier-to-use analytics than Hadoop MapReduce. Hadoop MapReduce cannot process data as fast, and Spark does not have its own data storage, so the two compensate for each other's drawbacks and work well together: we store data in the Hadoop Distributed File System (HDFS), use YARN for resource allocation, and run Spark on top of it to process the data fast.

One of the most important capabilities in Spark is persisting (or caching) a dataset in memory across operations. When you persist an RDD, each node stores in memory any partitions of it that it computes and reuses them in other actions on that dataset (or on datasets derived from it). This design allows future actions to be much faster (often by more than 10x).
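The effect of persisting can be illustrated with a small pure-Python analogy. This is not the PySpark API: `ToyRDD`, its `persist()`, `count()` and `total()` methods, and the `compute_calls` counter are hypothetical names chosen for this sketch. Without persisting, every action recomputes every partition; with persisting, the first action fills a cache that later actions reuse.

```python
# A pure-Python toy model of Spark-style caching (NOT the PySpark API).
# ToyRDD, persist(), count(), total() and compute_calls are hypothetical names.


class ToyRDD:
    """A dataset split into partitions, each produced by a per-element function."""

    def __init__(self, partition_sources, fn):
        self.partition_sources = partition_sources  # raw input, one list per partition
        self.fn = fn                                # per-element transformation
        self.compute_calls = 0                      # how many partitions were computed
        self._persisted = False
        self._cache = None                          # filled by the first action once persisted

    def persist(self):
        # Only marks the dataset for caching; nothing is computed yet.
        self._persisted = True
        return self

    def _partitions(self):
        # Reuse cached partitions if an earlier action already computed them.
        if self._cache is not None:
            return self._cache
        parts = []
        for source in self.partition_sources:
            self.compute_calls += 1
            parts.append([self.fn(x) for x in source])
        if self._persisted:
            self._cache = parts
        return parts

    # Two "actions" that both need the computed partitions.
    def count(self):
        return sum(len(p) for p in self._partitions())

    def total(self):
        return sum(sum(p) for p in self._partitions())


if __name__ == "__main__":
    data = [[1, 2, 3], [4, 5]]  # two partitions

    plain = ToyRDD(data, lambda x: x * x)
    plain.count(), plain.total()
    print("without persist:", plain.compute_calls)  # 2 partitions x 2 actions

    cached = ToyRDD(data, lambda x: x * x).persist()
    cached.count(), cached.total()
    print("with persist:", cached.compute_calls)    # partitions computed once
```

In real Spark the cached partitions live in executor memory across the cluster and can be evicted, so this toy models only the reuse, not the storage.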

#Download spark 2.10. bin hadoop2.7 tgz line command driver#

All transformations in Spark are lazy, meaning they do not compute their results right away. Instead, they just remember the transformations applied to some base dataset. The transformations are only computed when an action, such as `reduce`, requires a result to be returned to the driver program.
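The following pure-Python sketch mimics this behaviour. It is an analogy, not Spark's implementation, and `LazyDataset` with its `map`, `filter`, `collect` and `reduce` methods are hypothetical names. Transformations only append to a recorded list of operations; nothing runs until an action walks the base dataset through them.

```python
from functools import reduce as fold

# A pure-Python analogy of lazy transformations (NOT the PySpark API).
# LazyDataset and its methods are hypothetical names for illustration.


class LazyDataset:
    """Records transformations and runs them only when an action is called."""

    def __init__(self, source, ops=()):
        self.source = source   # the base dataset
        self.ops = tuple(ops)  # recorded transformations, in order

    # --- transformations: return a new dataset, compute nothing ---
    def map(self, fn):
        return LazyDataset(self.source, self.ops + (("map", fn),))

    def filter(self, pred):
        return LazyDataset(self.source, self.ops + (("filter", pred),))

    # --- the pipeline, executed element by element on demand ---
    def _run(self):
        for item in self.source:
            keep = True
            for kind, fn in self.ops:
                if kind == "map":
                    item = fn(item)
                elif not fn(item):  # a "filter" step that rejects the item
                    keep = False
                    break
            if keep:
                yield item

    # --- actions: force the computation and return a result ---
    def collect(self):
        return list(self._run())

    def reduce(self, fn):
        return fold(fn, self._run())


if __name__ == "__main__":
    evens_squared = LazyDataset([1, 2, 3, 4]).map(lambda x: x * x).filter(lambda x: x % 2 == 0)
    # Nothing has been computed yet; the next two lines are actions.
    print(evens_squared.collect())
    print(evens_squared.reduce(lambda a, b: a + b))
```

Each action reruns the whole recorded pipeline from the base dataset, which is why persisting a dataset that several actions share pays off.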

#Download spark 2.10. bin hadoop2.7 tgz line command pdf#

If you're facing a Spark interview and wish to enter this field, you must be well prepared. This blog will help you understand the top Spark interview questions and prepare well for any of your upcoming interviews. It covers questions ranging from the basics to the intermediate level.

Top Spark Interview Questions:

Q1) What is Apache Spark?

Apache Spark is an analytics engine for processing data at large scale. It provides high-level APIs (Application Programming Interfaces) in multiple programming languages, such as Java, Scala, Python and R, and an optimized engine that supports general execution graphs. It also supports a rich set of higher-level tools, including Spark SQL for SQL and structured data processing, MLlib for machine learning, GraphX for graph processing, and Structured Streaming for incremental computation and stream processing.

Q2) What is an RDD in Apache Spark?

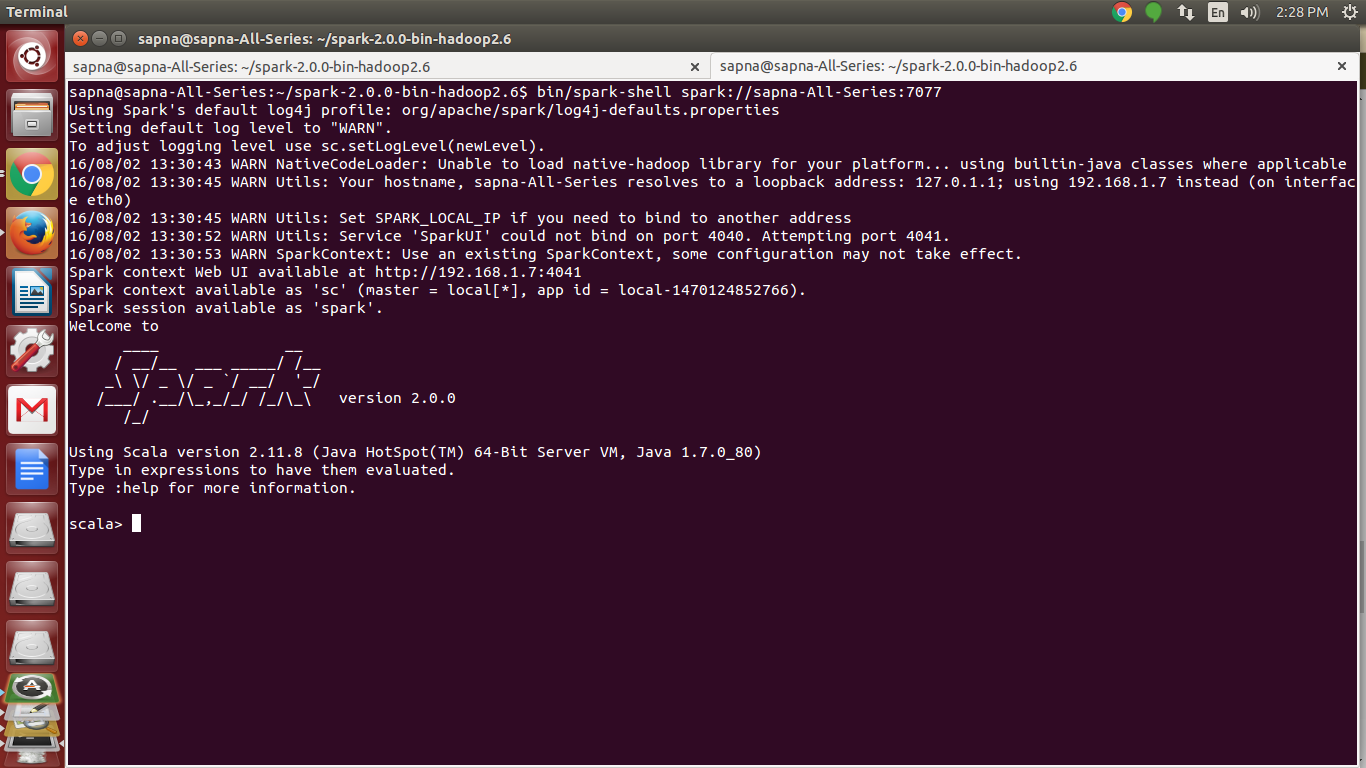

Spark is an open-source framework that provides an interface for programming entire clusters with implicit data parallelism and fault tolerance.

#Download spark 2.10. bin hadoop2.7 tgz line command download#

Note: If the URL does not work, please go to the Apache Spark download page to check for the latest version. Remember to replace the Spark version number in the subsequent commands if you change the download URL.

#Download spark 2.10. bin hadoop2.7 tgz line command archive#

Now, extract the saved archive using tar:

`tar xvf spark-*`

The output shows the files that are being unpacked from the archive.

Finally, move the unpacked directory spark-3.0.1-bin-hadoop2.7 to the /opt/spark directory. Use the mv command to do so:

`sudo mv spark-3.0.1-bin-hadoop2.7 /opt/spark`

The terminal returns no response if it successfully moves the directory. If you mistype the name, you will get a message similar to:

`mv: cannot stat 'spark-3.0.1-bin-hadoop2.7': No such file or directory`

#Configure Spark Environment#

Before starting a master server, you need to configure environment variables. There are a few Spark home paths you need to add to the user profile. Use the echo command to append these three lines to .profile:

`echo 'export SPARK_HOME=/opt/spark' >> ~/.profile`

`echo 'export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin' >> ~/.profile`

`echo 'export PYSPARK_PYTHON=/usr/bin/python3' >> ~/.profile`

Use `>>` so that each line is appended; a single `>` would overwrite the whole profile. The single quotes stop the shell from expanding `$PATH` and `$SPARK_HOME` while the line is written, so they are resolved each time the profile loads.

You can also add the export paths by editing the .profile file in the editor of your choice, such as nano or vim. For example, to use nano, enter: `nano ~/.profile`. When the profile loads, scroll to the bottom of the file and add the three export lines there.
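The profile step can also be scripted so that running it twice does not add duplicate lines. The sketch below is a hypothetical Python helper (`append_spark_exports` is not part of Spark); it assumes the same three export lines and paths used in this guide and appends only the ones missing from the file. Because Python writes the strings verbatim, `$PATH` and `$SPARK_HOME` stay literal and are resolved by the shell when the profile loads.

```python
import tempfile
from pathlib import Path

# Hypothetical helper, not part of Spark: the export lines and paths are
# assumptions copied from this guide (/opt/spark, /usr/bin/python3).
SPARK_EXPORTS = [
    "export SPARK_HOME=/opt/spark",
    "export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin",  # kept literal, not expanded
    "export PYSPARK_PYTHON=/usr/bin/python3",
]


def append_spark_exports(profile_path, lines=SPARK_EXPORTS):
    """Append each export line missing from profile_path; return the lines added."""
    path = Path(profile_path).expanduser()
    text = path.read_text() if path.exists() else ""
    existing = text.splitlines()
    missing = [line for line in lines if line not in existing]
    if missing:
        # Start on a fresh line if the file does not already end with a newline.
        prefix = "\n" if text and not text.endswith("\n") else ""
        with path.open("a") as fh:  # "a" appends, like >> in the shell
            fh.write(prefix + "\n".join(missing) + "\n")
    return missing


if __name__ == "__main__":
    # Demo on a throwaway file instead of the real ~/.profile.
    with tempfile.TemporaryDirectory() as tmp:
        demo = Path(tmp) / ".profile"
        print(append_spark_exports(demo))  # all three lines are added
        print(append_spark_exports(demo))  # nothing is added on the second run
```

Calling `append_spark_exports("~/.profile")` would update the real profile; the variables take effect in a new shell or after running `source ~/.profile`.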
